Tags

"Citizens United", AI, Artificial Intelligence, biotech, chatgpt, chess, cognitive computing, Democracy, emotional intelligence, ethics, HCI, life, prediction, psychokinesis, technology, the singularity, truth, Turing, USA, UX

Abracadabra!

Here’s the thing.

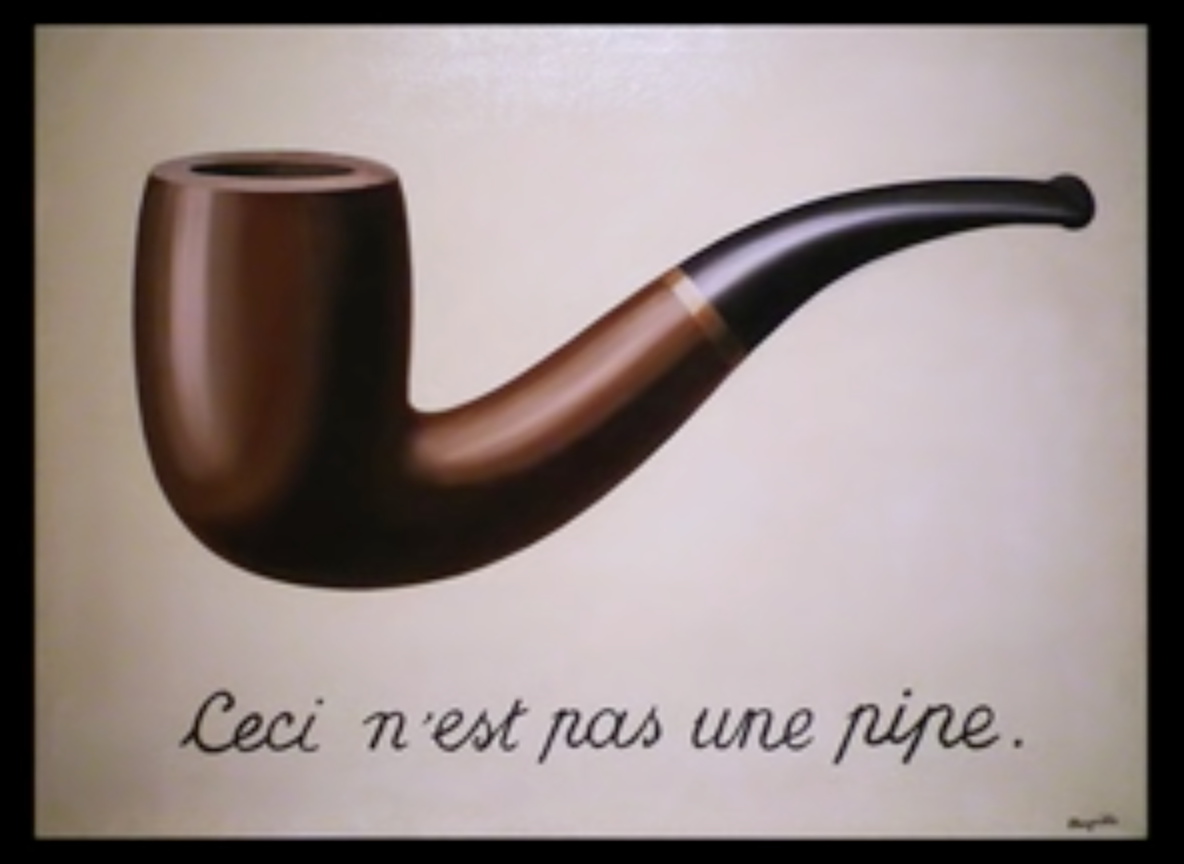

There is no magic.

Of course, there is the magic of love and the wonder at the universe and so there is metaphorical magic. But there is no physical magic and no mathematical magic. Why do we care? Because in most science fiction scenarios, when super-intelligence happens, whether it is artificial or humanoid, magic happens. Not only can the super-intelligent person or computer think more deeply and broadly, they also can start predicting the future, making objects move with their thoughts alone and so on. Unfortunately, it is not just in science fiction that one finds such impossibilities but also in the pitches of companies about biotech and the future of artificial intelligence. Now, don’t get me wrong. Of course, there are many awesome things in store for humanity in the coming millennia, most of which we cannot even anticipate. But the chances of “free unlimited energy” and a computer that will anticipate and meet our every need are slim indeed.

This all-too-popular exaggeration is not terribly surprising. I am sure much of what I do seems quite magical to our cats. People in possession of advanced or different technology often seem “magical” to those with no familiarity with the technology. But please keep in mind that making a human brain “better”, whether by making it bigger, giving it more connections, or making it faster, will not enable the brain to move objects via psychokinesis. Yes, the brain does produce a minuscule amount of electricity, but way too little to move mountains or freight trains.

Of course, machines can be built to wield a lot of physical energy, but it isn’t the information processing part of the system that directly causes something in the physical world. It is through actuators of some type, just as it is with animals. Of course, super-intelligence could make the world more efficient. It is also possible that super-intelligence might discover as yet undiscovered forces of the universe. If it turns out that our understanding of reality is rather fundamentally flawed, then all bets are off. For example, if it turns out that there are twelve fundamental forces in the universe (or just one), and a super-intelligent system determines how to use them, it might be possible that there is potential energy already stored in matter which can be released by the slightest “twist” in some other dimension or by using some as yet undiscovered force. To human beings who have never known about the other eight forces, let alone how to harness them, this might appear as “magic.”

There is another, more subtle kind of “magic” that might be called mathematical magic. As has long been known, it is theoretically possible to play perfect chess by calculating all possible moves, and all possible responses to those moves, and so on, down to the final draws and checkmates. It has been calculated that such an enumeration of contingencies would not be possible even if the entire universe were a nano-computer operating in parallel since the beginning of time. There are many similar domains. Just because a person or computer is way, way smarter does not mean they will be able to calculate every possibility in a highly complex domain.
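To get a feel for the scale, here is a back-of-the-envelope sketch. Every number in it is an assumption chosen for illustration: roughly 35 legal moves per position, games of about 80 half-moves, about 10^80 atoms in the universe, and a wildly generous (hypothetical) 10^15 operations per atom per second. It is not the calculation referred to above, only the same kind of arithmetic.

```python
# Rough orders of magnitude only. All constants are assumptions for illustration.
BRANCHING_FACTOR = 35                 # assumed average number of legal moves
GAME_LENGTH_PLIES = 80                # assumed game length in half-moves
ATOMS_IN_UNIVERSE = 10**80            # commonly quoted rough estimate
OPS_PER_ATOM_PER_SEC = 10**15         # hypothetical and very generous
AGE_OF_UNIVERSE_SEC = 435 * 10**15    # about 13.8 billion years


def game_tree_size(branching, plies):
    """Number of move sequences if every position had `branching` replies."""
    return branching ** plies


def universe_budget(atoms, ops_per_sec, seconds):
    """Total operations if every atom computed in parallel the whole time."""
    return atoms * ops_per_sec * seconds


def order_of_magnitude(n):
    """Power of ten of a positive integer (number of digits minus one)."""
    return len(str(n)) - 1


lines = game_tree_size(BRANCHING_FACTOR, GAME_LENGTH_PLIES)
budget = universe_budget(ATOMS_IN_UNIVERSE, OPS_PER_ATOM_PER_SEC, AGE_OF_UNIVERSE_SEC)

print(f"move sequences to examine: about 10^{order_of_magnitude(lines)}")
print(f"operations available:      about 10^{order_of_magnitude(budget)}")
print(f"universes still needed:    about 10^{order_of_magnitude(lines // budget)}")
```

Change the assumed constants however you like. Being a thousand or a million times faster barely dents an exponent of that size, which is the point: more speed is not the same thing as magic.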

Of course, it is also possible that some domains might appear impossibly complex but actually be governed by a few simple but extremely difficult-to-discover laws. For instance, it might turn out that one can calculate the precise value of a chess position (encapsulating all possible moves implicitly) through some as yet undiscovered algorithm written perhaps in an as yet undesigned language. It seems doubtful that this would be true of every domain, but it is hard to say a priori.

There is another aspect of unpredictability, and that has to do with random and chaotic effects. Imagine trying to describe every single molecule of earth’s seas and atmosphere in terms of its motion and position. Even if there were some way to predict state N+1 from N, we would have to know everything about state N. The effects of the slightest miscalculation or missing piece of data could be amplified over time. So long-term predictions of fundamentally chaotic systems like weather, or what your kids will be up to in 50 years, or what the stock market will be in 2600 are most likely impossible, not because our systems are not intelligent enough but because such systems are by their nature not predictable. In the short term, weather is largely, though not entirely, predictable. The same holds for what your kids will do tomorrow or, within limits, what the stock market will do. The ability to do long-term prediction is quite different.
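A toy illustration of that amplification: the sketch below uses the logistic map, a standard textbook example of a chaotic system, as a stand-in for “predicting state N+1 from N.” It is my choice for illustration and has nothing to do with real weather. Two runs start out differing by one part in ten billion (an assumed measurement error), and we watch the gap between them.

```python
def logistic(x, r=4.0):
    """One step of the logistic map; r = 4.0 puts it in the chaotic regime."""
    return r * x * (1.0 - x)


def gap_over_time(x0, error, steps):
    """Run two copies whose starting points differ by `error`.

    Returns the absolute gap between them at steps 0, 1, ..., steps - 1.
    """
    a, b = x0, x0 + error
    gaps = []
    for _ in range(steps):
        gaps.append(abs(a - b))
        a, b = logistic(a), logistic(b)
    return gaps


# Assumed starting value and measurement error, chosen only for illustration.
gaps = gap_over_time(x0=0.2, error=1e-10, steps=60)

for n in range(0, 60, 10):
    print(f"step {n:2d}: gap = {gaps[n]:.3e}")
```

For the first few steps the two runs are indistinguishable, which is the “tomorrow’s weather” case. Within a few dozen steps the gap is as large as the values themselves, and a smarter predictor does not help; only a perfect measurement of the starting state would.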

In The Sciences of the Artificial, Herb Simon provides a nice thought experiment about the temperature in various regions of a closed space. I am paraphrasing, but imagine a dormitory with four “quads.” Each quad has four rooms and each room is partitioned into four areas with screens. The screens are not very good insulators, so if the temperatures in these areas differ, they will quickly converge. In the longer run, the temperature will tend toward the average in the entire quad. In the very long term, if no additional energy is added, the entire dormitory will tend toward the global average. So, when it comes to many kinds of interactions, nearby interactions dominate, but in the long term, more global forces come into play.
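Here is a minimal sketch of that paraphrased dormitory, not Simon’s own formulation. The exchange rates and starting temperatures are made-up assumptions: heat leaks quickly between areas of a room, slowly between rooms of a quad, and very slowly between quads. The code counts how many time steps it takes for each level to even out.

```python
QUADS, ROOMS, AREAS = 4, 4, 4

# Assumed per-step exchange rates (hypothetical): flimsy screens between
# areas, better walls between rooms, and much better walls between quads.
K_AREA, K_ROOM, K_QUAD = 0.5, 0.05, 0.005


def avg(values):
    values = list(values)
    return sum(values) / len(values)


def initial_temps():
    """Arbitrary starting temperatures keyed by (quad, room, area)."""
    return {(q, r, a): 15.0 + 4.0 * q + 1.0 * r + 0.25 * a
            for q in range(QUADS) for r in range(ROOMS) for a in range(AREAS)}


def step(temps):
    """Nudge every area toward its room, quad, and building averages."""
    building = avg(temps.values())
    quad_avg = {q: avg(t for k, t in temps.items() if k[0] == q)
                for q in range(QUADS)}
    room_avg = {(q, r): avg(t for k, t in temps.items() if k[:2] == (q, r))
                for q in range(QUADS) for r in range(ROOMS)}
    return {k: t
            + K_AREA * (room_avg[k[:2]] - t)
            + K_ROOM * (quad_avg[k[0]] - t)
            + K_QUAD * (building - t)
            for k, t in temps.items()}


def spread(temps, depth):
    """Largest max-minus-min within any group.

    depth 2 groups by room, depth 1 by quad, depth 0 is the whole building.
    """
    groups = {}
    for k, t in temps.items():
        groups.setdefault(k[:depth], []).append(t)
    return max(max(g) - min(g) for g in groups.values())


def steps_until_even(depth, tolerance=0.1, max_steps=5000):
    """Steps until every group at this depth is within `tolerance` degrees."""
    temps = initial_temps()
    for n in range(max_steps):
        if spread(temps, depth) < tolerance:
            return n
        temps = step(temps)
    return max_steps


room_steps = steps_until_even(depth=2)
quad_steps = steps_until_even(depth=1)
building_steps = steps_until_even(depth=0)
print("rooms even out after   ", room_steps, "steps")
print("quads even out after   ", quad_steps, "steps")
print("building evens out after", building_steps, "steps")
```

With these assumed rates the rooms settle almost immediately, the quads take much longer, and the whole building takes longer still. Notice, though, that the sketch treats the dormitory as a closed system, and the total heat never changes.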

Now, let us take Simon’s simple example and consider what might happen in the real world. We want to predict what the temperature is in a particular partitioned area in 100 years. In reality, the dormitory is not a closed system. Someone may buy a space heater and continually keep their little area much warmer. Or, maybe that area has a window that faces south. But it gets worse. Much worse. We have no idea whether this particular dormitory will even exist in 100 years. It depends on fires, earthquakes, and the generosity of alumni. In fact, we don’t even know whether brick and mortar colleges in general will exist in 100 years. As we try to predict over longer and longer time frames, not only do factors that are more distant physically come into play; the determining factors also become more distant conceptually. In a 100-year time frame, the entire college may or may not exist, and we don’t even know whether the determining factor(s) will be financial, astronomical, geological, political, social, physical or what. This is not a problem that will be solved via “Artificial Intelligence” or by giving human beings “better brains” via biotech.

Whoa! Hold on there. Once again, it is possible that in some other dimension or using some other as yet undiscovered force, there is a law of conservation so that going “off track” in one direction causes forces to correct the imbalance and get back on track. It seems extremely unlikely, but it is conceivable that our model of how the universe works is missing some fundamental organizing principle and what appears to us as chaotic is actually not.

The scary part, at least to me, is that some descriptions of the wonderful world that awaits us (once our biotech or AI start-up is funded) depend on there being a much simpler, as yet unknown force or set of forces that is both completely unanticipated and discoverable. Color me “doubting Thomas” on that one.

It isn’t just that investing in such a venture might be risky in terms of losing money. It is that we humans are subject to a blind pride that makes people presume that they can predict what the impact of making a genetic change will be, not just on a particular species in the short term, but on the entire planet in the long run. We can indeed make small changes in both biotech and AI and see improvements in our lives. But when it comes to recreating dinosaurs in a real life Jurassic Park or replacing human psychotherapists with robotic ones, we really cannot predict what the net effect will be. As humans, we are certainly capable of imagining possibilities, containing them, and testing them slowly as we introduce them. Yeah. That could happen. But…

What seems to actually happen, however, is that companies not only want to make more money; they want to make more money now. We have evolved social and legal and political systems that put almost no brakes on runaway greed. The result is that more than one drug has been put on the market that has had a net negative effect on human health. This is partly because long-term effects are very hard to ascertain, but the bigger cause is unbridled greed. Corporations, like horses, are powerful things. You can ride farther and faster on a horse. And certainly corporations are powerful agents of change. But the wise rider is master of, or partner with, the horse. They don’t allow themselves to be dragged along the ground by a rope while the horse goes wherever it will. Sadly, that is precisely the position society is in vis-à-vis corporations. We let them determine the laws. We let them buy elections. We let them control virtually every news medium. We no longer use them to get amazing things done. We let them use us to get done what they want done. And what is that thing that they want done? Make hugely more money for a very few people. Despite this, most companies still manage to do a lot of net good in the world. I suspect this is because human beings are still needed for virtually every vital function in the corporation.

What will happen once the people in a corporation are no longer needed? What will happen when people who remain in a corporation are no longer people as we know them, but biologically altered? It is impossible to predict with certainty. But we can assume that it will seem to us very much like magic.

Very.

Dark.

Magic.

Abracadabra!

How the Nightingale Learned to Sing

The Midnight Flight to Crazytown

Who Won the War?

We Won the War! We Won the War!

Tools of Thought: And then what?