Soon after I began the Artificial Intelligence Lab at a major telecom company, we heard about an opportunity for an Expert System. The company wanted to improve the estimation of complex, large-scale, inside wiring jobs. We sought someone who qualified as an expert. Not only could we not locate an expert; we discovered that the company (and the individual estimators) had no idea how good or bad they were. Estimators would go in, take a look at what would be involved in an inside wiring job, make their estimate, and then proceed to the next estimation job. Later, when the job was completed, no mechanism existed to relate the estimate back to the actual cost of the job. At the time, I found this astounding. I’m a little more jaded now, but I am still amazed at how many businesses, large and small, have what are essentially no-learning, zero-feedback, open loops.

As another example, some years earlier, my wife and I arrived late and exhausted at a fairly nice hotel. Try as we might, we could not get the air conditioning to do anything but make the room hotter. When we checked out, the cashier asked us how our stay was. We explained that we could not get the air conditioning to work. The cashier’s reaction? “Oh, yes. Everyone has that trouble. The box marked ‘air conditioning’ doesn’t work at all. You have to turn the heater on and then set it to a cold temperature.” “Everyone has that trouble”? Then why hasn’t this been fixed? Clearly, the cashier has no mechanism or no motivation to report the trouble “upstream,” or no one upstream really cares. Moreover, this exchange reveals that when the cashier asks the obligatory question, “How was your stay?” what he or she really means is this: “We don’t really care what you have to say and we won’t do anything about it, but we want you to think that we actually care. That’s a lot cheaper and doesn’t require management to think.” Open Loop.

Lately, I have been posting a lot in a LinkedIn forum called “project management” because I find the topic fascinating and because I have a lot of experience with various projects in many different venues. According to some measure, I was marked as a “top contributor” to this forum. The last time I logged on, I was surprised by a message saying that my contributions to discussions would no longer appear automatically because something I posted had been flagged as “spam” or a “promotion.” However, there is no feedback as to which post this was, why it was flagged, or by whom or what. So, I have no idea whether some post was flagged by an ineffectual natural language processing program, by someone with a grudge because they didn’t agree with something I said, or by one of the “moderators” of the forum.

LinkedIn itself is singularly unhelpful in this regard. If you try to find out more, they simply (but with far more text) list all the possibilities I have outlined above. Although this particular forum is very popular, it seems to me that it is “moderated” by a group of people who, at least in many cases, are actually using the forum as a rather thinly veiled promotion for their own seminars, ebooks, etc. So, one guess is that the moderators are reacting to my having simply posted too many legitimate postings that do not point people back to their own wares. Of course, there are many other possibilities. The point here is that I do not know, nor can I easily find out, what the real situation is. I have discovered, however, that many others are facing this same issue. Open loop rears its head again.

The final example comes from trying to re-order checks today. In my checkbook, I came to that point where there is a little insert warning me that I am about to run out and that I can re-order checks by phone. I called the 800 number and, sure enough, a real audio menu system answered. It asked me to enter my routing number and my account number. Fine. Then, it invited me to press “1” if I wanted to re-order checks. I did. Then, it began to play some other message. But soon after the message began, it said, “I’m sorry; I cannot honor that request.” And hung up. Isn’t it bad enough when an actual human being hangs up on you for no reason? This mechanical critter had just wasted five minutes of my time and then hung up. Note that no reason was given; no clue was provided to me as to what went wrong. I called back and the same dialogue ensued. This time, however, it did not hang up after I pressed “1” to re-order checks. Instead, it started to verify my address. It said, “We sent your last checks to an address whose zip code is 97…I’m sorry I’m having trouble. I will transfer you to an agent. Note that you may have to provide your routing number and account number again.” And…then it hung up.

Now, anyone can design a bad system. And even a well-designed system can sometimes misbehave for all sorts of reasons. Notice, however, that the designers have provided no feedback mechanism. It could be that 1% of the potential users are having this problem. Or, it could be that 99% or even 100% of the users are having these kinds of issues. But the company lacks a way to find out. Of course, I could call my Credit Union and let them know. However, anyone that I get hold of at the Credit Union, I can guarantee, will have no possible way to fix this. Moreover, I am almost positive that they won’t even have a mechanism to report it. Check printing and ordering are functions that are outsourced to an entirely different company. Someone in corporate, many years ago, decided to outsource the check printing, ordering, and delivery function. So people in the Credit Union itself are unlikely to even have a friend, uncle, or sister-in-law who works in that “department” (as may have been the case 20 years ago). So, not only does the overall system lack a formal feedback mechanism; it also lacks an informal feedback mechanism. Tellingly, the company that provides the automated “cannot order your checks” system provides no menu option for feedback about issues either. So, here we have a financial institution with a critical function malfunctioning and no real process to discover and fix it. Open loop.

Some folks these days wax eloquent about the upcoming “singularity.” This refers to the point in human history where an Artificial Intelligence (AI) system will be significantly smarter than a human being. In particular, such a system will be much smarter than human beings when it comes to designing ever-smarter systems. So, the story goes, before long, the AI will design an even better AI system for designing better AI systems, etc. I will soon have much to say about this, but for now, let me just say that before we proceed to blow too many trumpets about “artificial intelligence systems,” can we please first at least design a few more systems that fail to exhibit “artificial stupidity”? Ban the Open Loop!

Notice that sometimes there may be very long loops that, due to the nature of the situation, are much like open loops. We send out radio signals in the hope that alien intelligences may send us an answer. But the likely time frame is so long that it seems open loop. That situation contrasts with those above in the following way. There is no reason that feedback cannot be obtained, and rather quickly, in the cases above: estimating inside wiring, fixing the air conditioning signs, explaining why there is “moderation,” or repairing the faulty voice response system. You might think that sports must provide a wonderful venue that is devoid of open loops. In sports, you see or feel the results of what you do almost immediately. But that would underestimate the cleverness with which human beings are able to avoid what could be learned from feedback. Next time, we will explore that in more detail.

As I reconsider the essay above from the perspective of 2025, I see a federal government that has fully embraced “Open Loop” as a modus operandi. In some cases, they simply ignore the impact of their actions. In other cases, they do claim a positive impact, but the claim is simply a lie. For instance, it is claimed that tariffs are “working” in that foreign countries are paying money to America. That’s just an out-and-out lie. So, the entire government is operating with no real feedback. We are told that ICE will target violent gang members and dangerous criminals. The reality of their actions is completely disconnected from that.

The Trumputin Misadministration works with no loop at all that correctly relates stated goals, actions taken supposedly to achieve those goals, and the actual effects of those actions. That can only happen when the government accepts and celebrates corruption. But the destruction will not be limited to government actions and effects. It will tend to spread to private enterprise as well. Just to take one example, if unchecked by courageous and ethical individuals, sports events will become corrupted.

Photo by Mark Milbert on Pexels.com

There’s money to be made by “fixing” events, and there will be pressure on athletes, managers, and referees to “fix” things so that the very wealthy can steal more money. Outcomes will no longer primarily be determined by training, skill, and heart. Of course, as fans learn over time that everything is fixed, the audience will diminish, but not to zero. Some folks will still find it interesting even if the outcome is fixed, as with the brutal conflicts in the movie Idiocracy, the lions eating Christians in the Roman circuses, or the so-called “sport” of killing innocent animals with high-powered guns. It’s not a sport when the outcome is slanted. Not only is it less interesting to normal folks, but it doesn’t push people to test their own limits. There’s nothing “heroic” about it. Nothing is learned. Nothing is really ventured. And nothing is really gained.

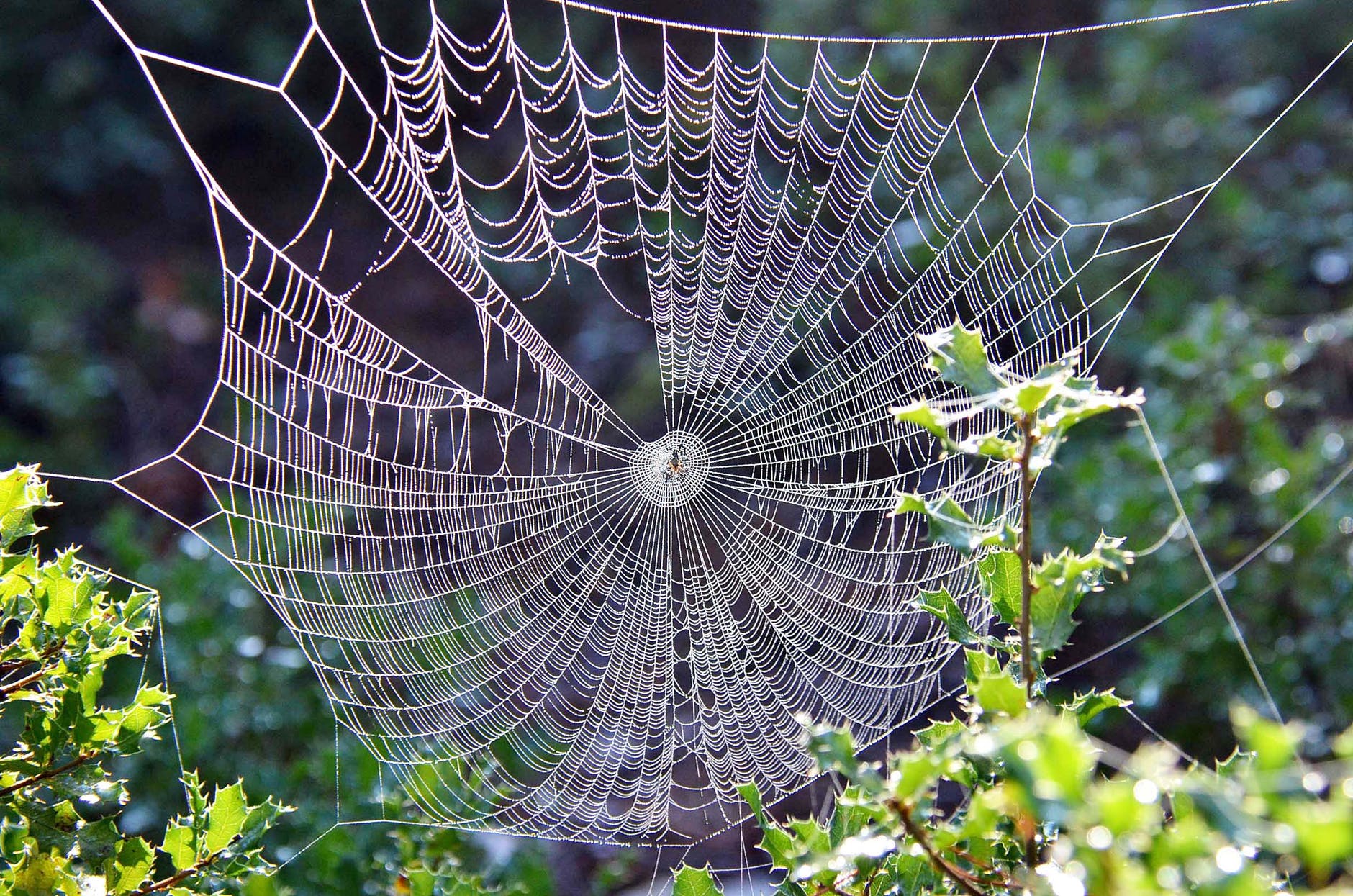

Photo by Gareth Davies on Pexels.com

———–

Where does your loyalty lie?