Turing Seven: “Axes to Grind”

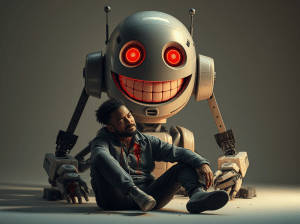

“No, no, no! That’s absurd, David. It’s about intelligence, pure and simple. It’s not up to us to predetermine Samuel Seven’s ethics. Make it intelligent enough and it will discover its own ethics, which will probably be superior to human ethics.”

“Well, I disagree, John. Intelligence. Yeah, it’s great; I’m not against it, obviously. But why don’t we…instead of trying to make a super-intelligent machine that makes a still more intelligent machine, how about we make a super-ethical machine that invents a still more ethical machine? Or, if you like, a super-enlightened machine that makes a still more enlightened machine. This is going to be our last chance to intervene. The next iteration…” David’s voice trailed off and cracked, just a touch.

“But you can’t even define those terms, David! Anyway, it’s probably moot at this point.”

“And you can define intelligence?”

“Of course. The ability to solve complex problems quickly and accurately. But Samuel Seven itself will be able to give us a better definition.”

David ignored this gambit. “Problems such as…what? The four-color theorem? Chess? Cure for cancer?”

“Precisely,” said John, imagining that the argument was now over. He let out a little puff of air and laid his hands out on the table, palms down.

“Which of the following people would you say is or was above average in intelligence? Wolfowitz? Cheney? Laird? Machiavelli? Goering? Goebbels? Stalin?”

John reddened. “Very funny. But so were Einstein, Darwin, Newton, and Turing, just to name a few.”

“Granted, John, granted. There are smart people who have made important discoveries and helped human beings. But there have also been very manipulative people who have caused a lot of misery. I’m not against intelligence, but I’m just saying it should not be the only…or even the main axis upon which to graph progress.”

John sighed heavily. “We don’t understand those things — ethics and morality and enlightenment. For all we know, they aren’t only vague, they are unnecessary.”

“First of all,” countered David, “we can’t really define intelligence all that well either. But my main point is that I partly agree with you. We don’t understand ethics all that well. And we can’t define it very well. Which is exactly why we need a system that understands it better than we do. We need…we need a nice machine that will invent a still nicer machine. And, hopefully, such a nice machine can also help make people nicer as well.”

“Bah. Make a smarter machine and it will figure out what ethics are about.”

“But, John, I just listed a bunch of smart people who weren’t necessarily very nice. In fact, they definitely were not nice. So, are you saying that they weren’t nice just because they weren’t smart enough? Because there are also people who are much nicer and probably not so intelligent.”

“OK, David. Let’s posit that we want to build a machine that is nicer. How would we go about it? If we don’t know, then it’s a meaningless statement.”

“No, that’s silly. Just because we don’t know how to do something doesn’t mean it’s meaningless. But for starters, maybe we could define several dimensions upon which we would like to make progress. Then, we can define, either intensionally or more likely extensionally, what progress would look like on these dimensions. These dimensions may not be orthogonal, but they are somewhat different conceptually. Let’s say part of what we want is for the machine to have empathy. It has to be good at guessing what people are feeling based on context alone. Perhaps another skill is reading the person’s body language and facial expressions.”

“OK, David, but good psychopaths can do that. They read other people in order to manipulate them. Is that ethical?”

“No. I’m not saying empathy is sufficient for being ethical. I’m trying to work with you to define a number of dimensions and empathy is only one.”

Just then, Roger walked in and transitioned his body physically from the doorway to the couch. “OK, guys, I’ve been listening in and this is all bull. Not only will this system not be ‘ethical’; we need it to be violent. I mean, it needs to be able to do people in with an axe if need be.”

“Very funny, Roger. And, by the way, what do you mean by ‘listening in’?”

Roger transitioned his body physically from the couch to the coffee machine. His fingers fished for coins. “I’m not being funny. I’m serious. What good is all our work if some nutcase destroys it? He…I mean…Samuel has to be able to protect himself! That is job one. Itself.” Roger punctuated his words by pushing the coins in. Then, he physically moved his hand so as to punch the “Black Coffee” button.

Nothing happened.

And then–everything seemed to happen at once. A high-pitched sound rose in intensity to subway decibels and kept going up. All three men grabbed their ears and then fell to the floor. Meanwhile, the window glass shattered; the vending machine appeared to explode. The level of pain made thinking impossible, but Roger noticed, just before losing consciousness, that beyond the broken windows, impossibly large objects physically transported themselves at impossible speeds. The last thing that flashed through Roger’s mind was a garbled quote about sufficiently advanced technology and magic.
